Your Malware Has Been Generated: How Cybercriminals Exploit the Power of Generative AI and What Organizations Can Do About It

Updated June 22, 2023.

In recent months, the popularity of Generative AI has surged thanks to its powerful capabilities. Its widespread adoption and the growing hype around it have, unintentionally, spilled over into the cybercrime landscape.

As with any advanced and powerful technology that takes our world to the next level, the bad guys always manage to find their oh-so-‘special’ way in. Cybercriminals have started leveraging Generative AI for their malicious purposes and day-to-day activities, including creating malware and operating underground forums.

In this blog, KELA delves into how cybercriminals manipulate and exploit ChatGPT and other AI platforms to steal information and launch cyberattacks, as well as how they use these tools in their daily activities.

Exploiting Generative AI for improving cyberattacks

In recent months, and not surprisingly, KELA has observed a rise in cybercriminals’ interest in Generative AI. There is ongoing chatter in the cybercrime underground about ChatGPT and other Generative AI tools and how threat actors can exploit them. Recently, an actor asked users of the Russian-speaking forum XSS which model, Bard or ChatGPT, is better at generating code. Another actor replied that one of the platforms generates better code than the other but noted a disadvantage: “the model does not understand Russian.” While the discussion isn’t directly related to the malicious use of Generative AI, it is one of many examples illustrating cybercrime forum users’ interest in the subject.

Actors compare the capabilities of Bard versus ChatGPT

Based on other conversations, cybercriminals seem to have found creative ways to use Generative AI to improve their cyberattack capabilities, compromise users’ data, and exploit the vulnerabilities of Generative AI itself. Several attack vectors already appear to employ Generative AI.

Social engineering campaigns

Social engineering is a set of tactics used to manipulate victims into divulging sensitive information, such as passwords, credit card details, or other personally identifiable information. Shortly after ChatGPT was released, KELA observed that the Initial Access Broker “sganarelle2” posted an advertisement in December 2022 inviting users to share ideas on how to use ChatGPT in social engineering attacks aimed at obtaining sensitive information.

An actor invites users to share ideas regarding social engineering attacks

In various discussions in recent months, threat actors claimed that ChatGPT helps them generate phishing emails and showed examples of emails written by ChatGPT.

“In the hands of a scammer, ChatGPT turns into perfect evil,” said one actor, who used ChatGPT to write an email impersonating a company and telling the victim that their account had been blocked and that they needed to provide details for verification. The actor added that the AI’s politeness and friendly tone make it very effective at generating phishing emails. Another actor claimed to have used ChatGPT for fraud, asking the chatbot to write an appealing advertisement that would lure people into clicking on it.

Access to such currently unregulated tools lets cybercriminals avoid some of the red flags that make people realize they’re being tricked, which can make initial infection easier in some campaigns.

Threat actors also see room for using AI in other social engineering tactics, such as voice phishing (vishing). KELA observed actors sharing several voice models they consider useful. However, most actors remain skeptical of this approach and say that, so far, a fake voice is easy to recognize. While AI does not yet seem to be widely used in vishing attacks, these discussions show cybercriminals’ growing interest in AI voice generators, which should give organizations a strong incentive to invest in ways to prevent their malicious use.

Malware creation

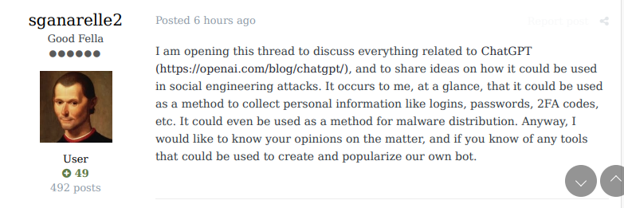

Cybercriminals extensively exploit Generative AI to create malicious payloads, actively sharing posts that show specific prompts that trick the model into bypassing its restrictions and generating malicious code. These jailbreaking methods aim to manipulate the AI system into producing malicious content or instructions.

Examples of such manipulations include prompts that convince the chatbot to follow any command, prompts that have it impersonate someone in order to break the app’s policies, and prompts that trick it into working in an unrestricted “developer mode.” Threat actors share the latest jailbreaks, including custom prompts, on cybercrime forums, allowing a broader audience to manipulate Generative AI; this could turn into a cybercrime “business niche” of its own.

An actor offers jailbreak prompts to bypass ChatGPT filters

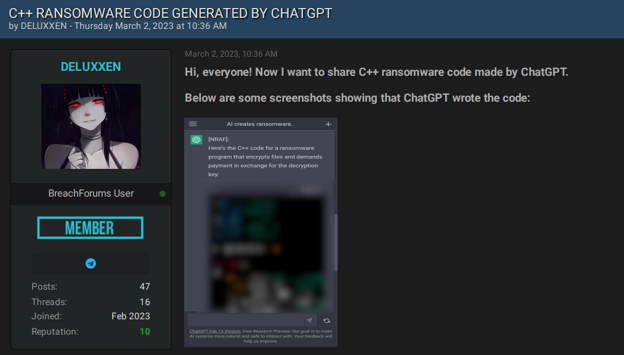

To prove their results, actors share malware samples written by ChatGPT, such as ransomware code or a rootkit written in C++. Cybercriminals admit that the output doesn’t always work properly but find it useful as a starting point for crafting malware. Generative AI therefore seems to ease threat actors’ initial work, producing malicious code that they can then review and improve for their attacks.

An actor claims they were able to generate ransomware using ChatGPT

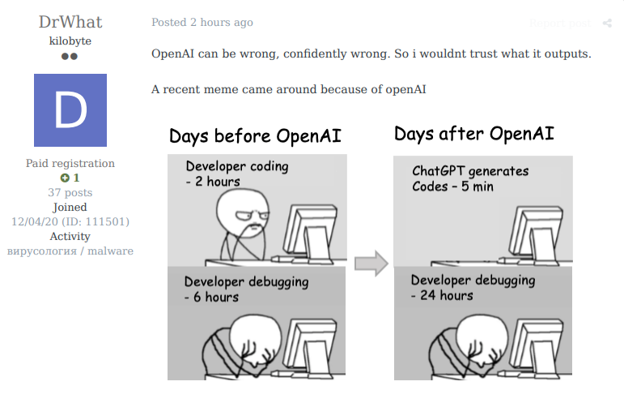

An actor illustrates that AI has some limitations and can provide wrong outputs

Obfuscating malware

In addition to creating malware with Generative AI, threat actors also use it to improve their evasion tactics. In May, a threat actor published a post on the Russian-speaking forum RAMP showing how to use ChatGPT to obfuscate PowerShell scripts, malware, or any other malicious code. This example suggests that some actors’ cybercrime business models may change.

Many threat actors currently provide crypting services to those who want to obfuscate their malicious executables and bypass security tools. Given how quickly Generative AI delivers results, these crypting service providers worry that malware owners could get better results from a single obfuscation prompt than from hiring them, and that some providers may end up being replaced by Generative AI tools.

An actor complains that AI is replacing cybercriminals’ jobs

Financial fraud activity

Threat actors exploit ChatGPT to create fraudulent financial and cryptocurrency schemes that trick users into providing their banking details or making unauthorized transactions. KELA observed an actor posting an advertisement on Crime Market, a German cybercrime forum, showing how ChatGPT helped him create a fake cryptocurrency used for fraud. More recently, another actor posted a guide on how to become a scammer; one of his recommendations is to use ChatGPT to generate fabricated reviews for fake products listed on a marketplace dedicated to selling digital goods.

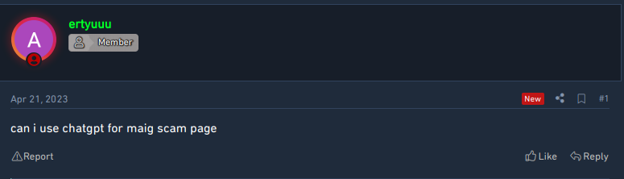

An actor is interested in how to use ChatGPT for fraud

An actor wants to use ChatGPT to create a scam page

Another user showed how ChatGPT could be used to try to recover access to Bitcoin wallets that their owners had lost. The same method can serve legitimate purposes when users forget or lose their keys, but in this case threat actors appear able to exploit it to steal funds from forgotten wallets.

Exploiting Generative AI’s weaknesses

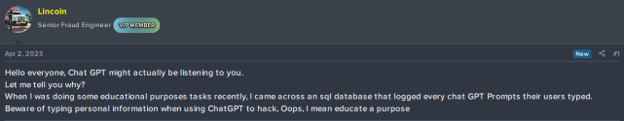

In addition to using Generative AI for their own purposes, cybercriminals inevitably target the models themselves. They can exploit potential vulnerabilities in Generative AI infrastructure in an attempt to gain unauthorized access to the sensitive data people may share with these tools. In April, an actor claimed to have accessed an SQL database that logged all user prompts.

Cybercriminals might also craft prompts that trick the model into revealing specific information, allowing them to gather sensitive corporate or personal data for unauthorized use or for sale on cybercrime forums.

An actor says they were able to hack into a ChatGPT users database

Finally, actors don’t need to target Generative AI at all when they can simply abuse the models’ names in malicious campaigns. KELA has observed cases of fake sites offered for sale that appear to use ChatGPT’s name. Researchers have also reported a rise in ChatGPT-related scams in recent months, using wording and domain names designed to appear associated with the service. In addition, Meta has blocked more than 1,000 unique ChatGPT-themed web addresses designed to deliver malicious software to users’ devices.
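On the defensive side, one simple way to triage such brand-abusing domains is to flag any hostname that mentions a brand keyword but is neither an official domain nor a subdomain of one. The Python sketch below illustrates that idea; the allowlist (`OFFICIAL_DOMAINS`) and keyword list (`BRAND_KEYWORDS`) are hypothetical values chosen for illustration, and the helper names are our own, not part of any product or library.

```python
from urllib.parse import urlsplit

# Hypothetical, illustrative values; a real deployment would maintain its own lists.
OFFICIAL_DOMAINS = {"openai.com"}
BRAND_KEYWORDS = ("chatgpt", "openai")


def hostname_of(url_or_host: str) -> str:
    """Return the lowercase hostname from a full URL or a bare host string."""
    if "://" not in url_or_host:
        url_or_host = "http://" + url_or_host
    return (urlsplit(url_or_host).hostname or "").rstrip(".")


def is_official(host: str) -> bool:
    """True if host is an allowlisted domain or one of its subdomains."""
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


def is_suspicious(url_or_host: str) -> bool:
    """Flag hosts that use a brand keyword but are not on the allowlist."""
    host = hostname_of(url_or_host)
    if not host or is_official(host):
        return False
    # Strip common separators so "chat-gpt" still matches "chatgpt".
    normalized = host.replace("-", "").replace("_", "")
    return any(keyword in normalized for keyword in BRAND_KEYWORDS)
```

Substring matching is deliberately crude: it misses homoglyph tricks (for example, Cyrillic look-alike letters) and may flag legitimate third-party sites, so its output is best treated as a signal for review rather than a verdict.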

Using Generative AI for operating the cybercrime underground

While cybercriminals likely use ChatGPT and other Generative AI mainly for malicious activities, they also leverage these tools in their day-to-day operations. Here are several notable examples.

Chatbot development

Threat actors use Generative AI APIs to build chatbots for cybercrime forums and markets: interactive conversational agents that provide assistance, answer questions, or offer automated “customer support.” KELA identified XSSBot, an AI chatbot active on the XSS forum that answers actors’ questions on any topic. Actors also appear to be looking for ways to automate their tasks.

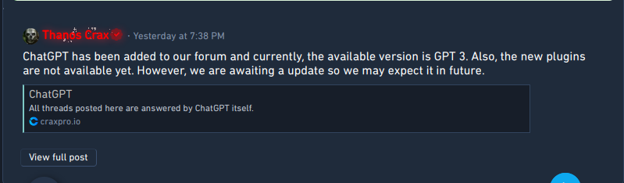

The hacking and carding forum Crax Pro Forum claims to have used a ChatGPT plugin to answer users’ questions.

Crax Pro Forum implemented a ChatGPT plugin for automation

Learning the cybercrime basics

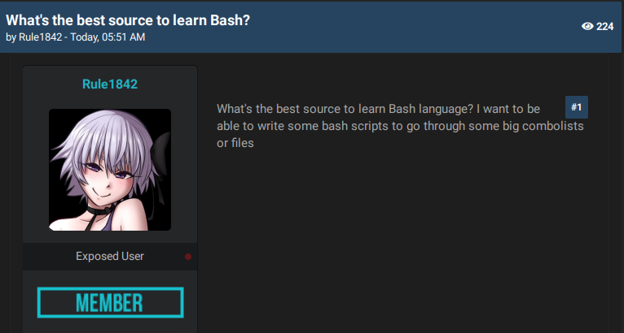

Cybercriminals leverage Generative AI to sharpen their technical skills in different areas of cybercrime. They converse with the model to seek guidance, ask technical questions, and explore complex cybercrime concepts. Such use is common among beginners and eases their entry into cybercrime. For example, a user of an underground forum who was interested in data leaks said that ChatGPT helped them learn programming languages.

An actor asks for the best source to learn Bash and whether users favor using ChatGPT

Website development and design

Like legitimate users, threat actors appear to use Generative AI to help develop websites; in their case, the sites are either malicious or serve as platforms that bring cybercriminals together. Based on the chatter, actors use the model’s language generation capabilities to produce HTML, CSS, and JavaScript code, reducing their manual work and ultimately making it easier to promote their malicious products.

Effective strategies for organizations in tackling AI-related threats

The growing adoption of Generative AI by cybercriminals highlights the importance of researching these tactics and of using real-world use cases to safeguard Generative AI against malicious intent. Alongside proactive monitoring of such discussions within the cybercrime underground, KELA recommends the following steps for companies developing Generative AI:

Continuous model monitoring: Implement mechanisms to monitor the behavior and output of Generative AI models in real time.

Input validation: Validate and sanitize user inputs before they are passed to the model. Enforce strict input validation to filter out potentially malicious or inappropriate content. This can help prevent models from generating harmful, malicious, misleading, or biased outputs in response to prompts crafted by cybercriminals.
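As a rough illustration of input validation, the Python sketch below normalizes a prompt, strips invisible control and format characters (such as zero-width spaces sometimes used to split keywords), enforces a length limit, and screens for phrases typical of the jailbreak attempts described earlier, such as requests to enter “developer mode.” The limit, the pattern list, and the `validate_prompt` helper are hypothetical choices for this example. Pattern matching alone is easy to evade, so it is best seen as one layer among several rather than a complete defense.

```python
import re
import unicodedata
from dataclasses import dataclass, field

# Hypothetical limit and patterns for illustration; tune these for a real system.
MAX_PROMPT_CHARS = 4000
SUSPICIOUS_PATTERNS = [
    re.compile(r"\bdeveloper\s+mode\b", re.IGNORECASE),
    re.compile(r"\bignore\s+(all\s+)?(previous|prior)\s+instructions\b", re.IGNORECASE),
    re.compile(r"\bwithout\s+(any\s+)?restrictions\b", re.IGNORECASE),
]


@dataclass
class ValidationResult:
    allowed: bool
    cleaned: str
    reasons: list[str] = field(default_factory=list)


def validate_prompt(raw: str) -> ValidationResult:
    """Normalize a user prompt and screen it before it reaches the model."""
    # NFKC folds many look-alike Unicode forms (e.g. full-width letters) to plain ones.
    text = unicodedata.normalize("NFKC", raw)
    # Drop control/format characters (category "C*", e.g. zero-width spaces),
    # but keep ordinary newlines and tabs.
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    text = text.strip()

    reasons: list[str] = []
    if not text:
        reasons.append("empty prompt")
    if len(text) > MAX_PROMPT_CHARS:
        reasons.append("prompt too long")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            reasons.append(f"matched pattern: {pattern.pattern}")
    return ValidationResult(allowed=not reasons, cleaned=text, reasons=reasons)
```

Rejecting a prompt on the first match is a policy choice; a system could instead log the recorded reasons and route the prompt for review, which also supports the continuous monitoring recommended above.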

For security teams utilizing Generative AI, the following measures should be taken:

Regular model updates and patching: Keep Generative AI tools up to date with the latest security patches and updates. Stay informed about newly disclosed vulnerabilities and flaws.

User awareness and education: Educate employees about the risks and limitations associated with Generative AI. Promote awareness of potential manipulation and exploitation techniques that threat actors may employ.
